Say hello to my digital Leonardo da Vinci: a lifelike, interactive AI character built in Unreal Engine with MetaHuman technology. This project is part of my final thesis in the MadTech master's program at NC State.

Overview

Using Unreal Engine 5.5, MetaHuman, Convai, and MetaTailor, I bring Leonardo da Vinci into the virtual world as a virtual human: not just a 3D model, but someone you can actually talk to. He is designed as an Embodied Conversational Agent (ECA), meaning he responds to you in real time with words, gestures, and facial expressions. This virtual Leonardo can answer questions, tell stories, and discuss his inventions while reacting with human-like presence and emotion.

Embodied Conversational Agent (ECA)

The concept of an Embodied Conversational Agent (ECA) comes from research in Human-Computer Interaction (HCI) and AI communication. ECAs are virtual characters that engage in face-to-face conversation with users, using both verbal and non-verbal communication — just like humans do. They combine speech, facial expression, gaze, and body movement to create a more intuitive and immersive interaction experience.

In my project, Leonardo is more than a chatbot with a face. He is an ECA with spatial awareness, memory, personality, and emotional nuance. The goal is to make the interaction feel less like operating software and more like talking to a real person. This theoretical framework guides both my design decisions and my technical choices in creating a responsive, believable character.

MetaHuman

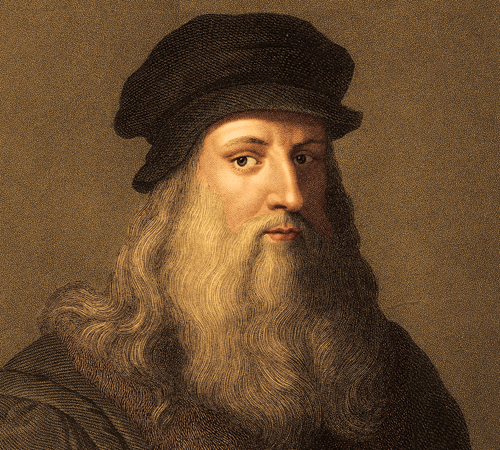

Leonardo was created with MetaHuman Creator, a tool for building high-fidelity digital humans. I carefully modeled his facial features to resemble the famous Renaissance artist, guided by historical paintings and sketches. The result is a stylized but recognizable version of Da Vinci, aged and wise, with an expressive facial rig enabled for real-time animation.

Leonardo da Vinci

Leonardo da Vinci (1452–1519) was a true Renaissance polymath — a painter, engineer, architect, anatomist, and inventor. He is widely known for masterpieces such as the Mona Lisa and The Last Supper, as well as for his visionary sketches of flying machines, anatomical studies, and mechanical inventions. His insatiable curiosity and creative genius made him one of the most influential figures in both art and science.

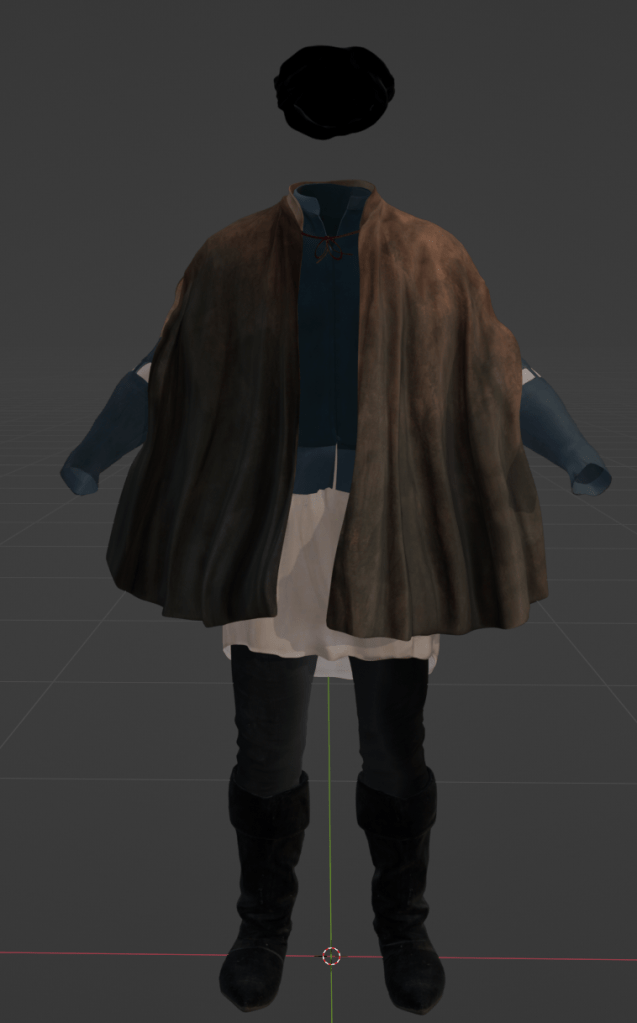

Clothing and Physics Simulation

Leonardo's Renaissance-era outfit is based on a high-quality, textured 3D clothing mesh acquired from Peris Digital, a Spanish company that specializes in scanning historical costumes. I imported the scanned outfit into MetaTailor, where I shrink-wrapped the garments to fit the MetaHuman base mesh. This let me preserve the historical accuracy of the costume while adapting it to the MetaHuman character.

While MetaTailor did a great job adapting the scanned Renaissance outfit to the MetaHuman base mesh, it was not equipped to handle physics simulation. The original clothing mesh from Peris Digital was also extremely high-poly, which made it unsuitable for real-time cloth simulation in Unreal Engine.

To solve this, I created a lower-resolution proxy mesh specifically for cloth physics. I used a third-party Blender plugin called Retopo Planes to retopologize the coat and generate a simplified version optimized for simulation. This made it possible to simulate natural cloth movement while keeping the system lightweight and responsive in real time.

After preparing the meshes, I imported both the detailed and proxy versions into Unreal Engine. I then bound the clothing to the MetaHuman base skeleton and enabled cloth simulation so the garments drape, move, and interact realistically with Leonardo's animations. The result is a dynamic costume that flows naturally with his gestures and adds a tactile sense of realism to the experience.

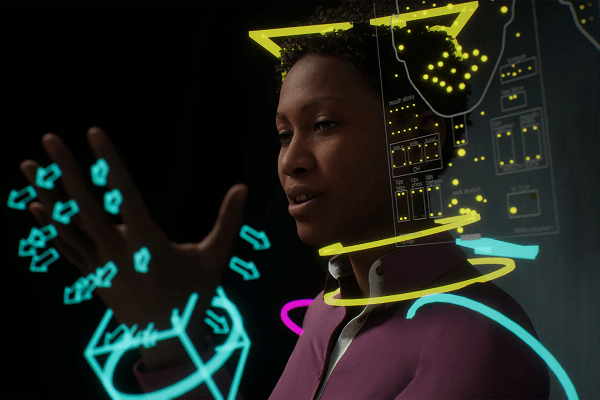

Convai

To drive real-time conversation, I used the Convai platform, which allows for AI-powered dialogue with contextual memory and emotional response. It also enables spatial interaction, allowing Leonardo to look at you, track movement, and respond as if he’s aware of the environment around him. This bridges the gap between digital avatars and truly interactive characters.

In designing Leonardo's AI, I calibrated his personality to reflect curiosity, wisdom, and warmth, traits associated with the historical figure. His conversational abilities are powered by Convai and enhanced with a curated knowledge base, so he can respond not only with factual accuracy but also with emotional nuance and a sense of historical character. Leonardo can track a user's position, respond in real time, and engage in meaningful, human-like dialogue.

Knowledge Bank

To give Leonardo the ability to speak with depth and context, I uploaded several curated resources to his knowledge bank. These include selections from Da Vinci's notebooks, such as the Codex Leicester, along with other collections that reflect his thoughts on art, science, anatomy, and philosophy. I also included references from Art and Life to add narrative richness to his dialogue. These documents give Leonardo source material to draw on when he speaks about his own life, beliefs, and inventions, offering users an immersive way to explore Renaissance history through conversation.

Conclusion

This project brings together design, AI, storytelling, and real-time animation to explore what’s possible when we blend creativity with cutting-edge technology. The result is an experience that is not only educational and entertaining but also a step toward the future of human-AI interaction.

Instructors

- Topher Maraffi: Assistant Professor of Media Arts, Design and Technology

- Patrick FitzGerald: Associate Professor of Media Arts, Design and Technology; Director of Graduate Programs in Media Arts, Design and Technology

- Justin Johnson: Assistant Professor of Media Arts, Design and Technology
